OpenAI vs. Google vs. Azure: A Speech-to-Text Battle

(This article was fully updated on 7th September 2023 to reflect new test results from OpenAI’s speech-to-text API.)

Recently, I had to compare the speech-to-text performance of Google, Azure and OpenAI, and thought the results might benefit others. Here’s a breakdown of my process and the results.

Tools and Resources

Before diving into the results, let’s look at the tools I used:

- Google’s STT Test Tool: Google Console

- Azure’s STT Test Tool: Microsoft Speech Portal

- OpenAI with Postman directly to their API: Speech to text API

- Word Error Rate (WER) Calculator: This tool by Amberscript was very helpful in deriving results.

The inspiration for this test stemmed from a research paper I stumbled upon. This paper, in turn, led me to some intriguing “language corpus” databases, such as OpenSLR and Mozilla’s Common Voice, which could be instrumental for future automated benchmarking. That last one goes on my ever-growing to-do list 🤓.

Methodology

- Test Sentence Generation: I used ChatGPT to generate some unique test sentences for this experiment.

- Recording: I then called my test IVR system in Anywhere365 Dialogue Cloud, and during the call, I recorded these sentences.

- Transcription: Post-recording, I ran these sentences through the STT engines.

- Evaluation: The transcribed results were then passed through the WER calculator to determine their accuracy.
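The evaluation step can be reproduced without an online calculator. The following is a minimal sketch of a word-level WER, computed as (substitutions + deletions + insertions) divided by the number of reference words. It assumes plain whitespace tokenization and an optional case-insensitive mode; the function name `word_error_rate` is my own, and the Amberscript tool may normalize punctuation or casing differently, so scores will not necessarily match the ones reported here.

```python
def word_error_rate(reference: str, hypothesis: str, case_sensitive: bool = True) -> float:
    """Return WER = (substitutions + deletions + insertions) / reference word count."""
    if not case_sensitive:
        reference, hypothesis = reference.lower(), hypothesis.lower()
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] = edit distance between the reference words processed so far and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from hypothesis
                curr[j - 1] + 1,     # insertion: extra word in hypothesis
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


# Usage: one sentence pair taken from the article (punctuation left as-is).
reference = "The insured’s policy code is FGHP456, can you reconfirm the details?"
hypothesis = "the insurance policy called is thp 456 can you reconfirm the details"
print(f"WER: {word_error_rate(reference, hypothesis):.2%}")
```

Because the comparison is word by word, punctuation attached to a word and differences in capitalization both count as errors unless the text is normalized first.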

Test Sentences

I asked ChatGPT to generate some sentences that are relevant for the banking and insurance sectors, plus a few for Anywhere365. This way I have some sentences with SWIFT codes and the like, but also custom product names to test the phrase set functionality that OpenAI, Azure and Google offer.

Test Configuration

Here’s a rundown of the configurations I tested:

- Google’s current default model: This was intended to serve as our baseline. I did not use any phrase set for this.

- Google’s phone model with a phrase set: An upgrade from the default tuned for phone calls.

- Azure real-time speech to text service with a phrase set. Punctuation was enabled by default, so I left it on.

- OpenAI’s single model, which accepts a limited set of words similar to the phrase set functionality of the other engines. It appears to have punctuation on by default.

For consistency, I used the same phrase set across all tests, which included the terms: Dialogue Studio, Snapper, Dialogue Intelligence, Attendant, and WebAgent.
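For the OpenAI test, the phrase set can be passed as the `prompt` form field of the transcription endpoint. The snippet below is a rough sketch of the non-file form fields one might enter in Postman. The helper name `build_form_fields`, the model name `whisper-1`, and the `language` and `response_format` values are assumptions on my side, not settings confirmed in this article; the audio itself goes in a separate `file` field.

```python
# Endpoint for OpenAI's speech-to-text API (multipart/form-data POST).
API_URL = "https://api.openai.com/v1/audio/transcriptions"

# The phrase set used across all engines in this test.
PHRASES = ["Dialogue Studio", "Snapper", "Dialogue Intelligence", "Attendant", "WebAgent"]


def build_form_fields(phrases, model="whisper-1", language="en"):
    """Build the non-file form fields for a transcription request.

    Hypothetical helper: the phrases are joined into one string and sent
    as the `prompt`, which nudges the model towards those spellings.
    """
    return {
        "model": model,               # assumption: the model name used for the test
        "language": language,         # assumption: English audio
        "prompt": ", ".join(phrases),
        "response_format": "json",
    }


fields = build_form_fields(PHRASES)
print(API_URL)
for key, value in fields.items():
    print(f"{key}: {value}")
```

Unlike a phrase set in Google or Azure, the prompt is free text rather than a weighted list, so this is only a loose equivalent.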

I intentionally left out Google’s short and long models, as preliminary tests showed that their results closely mirrored those of the phone model. They differ in whether they are meant for short or long audio clips, which is irrelevant for my test.

Results

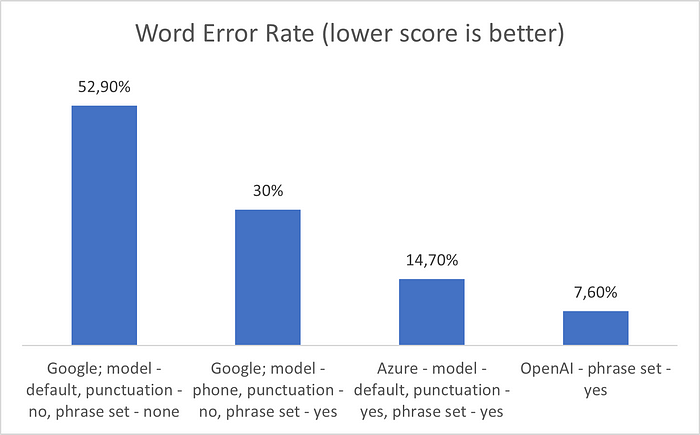

I used the standard WER score as the metric, where lower scores are better. For context, human transcription accuracy is at around 5–10% WER.

Here is an Airtable with all sentences and how the different engines heard them. In summary:

- Google’s Default Model: As expected, this served as a good benchmark. However, the results were far from perfect: this model struggled the most, with a WER of 52.90%. Some transcriptions were bewilderingly off, turning “The IBAN for the foreign transaction seemed wrong.” into a perplexing “VI bamboo flooring transactions in the wrong”, and even occasionally veering into unintended profanities 🙊.

- Google’s Phone Model with Phrase Set: The addition of the phrase set and the switch to the phone model led to a significant improvement, with this configuration achieving a WER of 30%. The transcriptions were clearer and noticeably more accurate, though the model still failed at IBAN and at spelled-out letters.

- Azure with Phrase Set: Azure’s performance was commendable, achieving a WER of 14.70%. While it consistently outperformed the Google models, it too struggled with spelled-out letters, though to a lesser extent.

- OpenAI Speech to text: The results were impressive, especially when compared to the other engines I tested. With a WER score of just 7.60%, OpenAI’s transcription API outperformed both Google and Azure in this test. The ability to accurately transcribe specific codes, like the SWIFT code, sets OpenAI’s model apart, making it a strong contender for applications that require high precision.

Comparative Analysis On Key Categories

Complex Terms and Acronyms:

- Google (Default Model): Struggled with terms like “IBAN” and “SWIFT”, transcribing them as “VI bamboo flooring” and “Swift Code”, respectively. It failed completely at spelled-out codes: the original “The SWIFT code is MNPVW44, or did I say MNBVW44?” was turned into “this beast called is M & B V W for 4 a.m. nbw 44” 🫠

- Google (Phone Model): Improved but still had issues, e.g., “Swift Code” for “SWIFT” and “I button” for “IBAN”, and it failed at spelled-out codes with “the Swift code is a and e v w 4 4 or did I say m n b r e w 44”.

- Azure: Better performance, with both “SWIFT” and “IBAN” transcribed correctly, but the code was still not presented as one string of letters: “The SWIFT code is MNB VW44 or did I say MBV W-44?”.

- OpenAI: Accurately transcribed both “IBAN” and “SWIFT” and was the only one that got the code itself as one string: “The SWIFT code is MNBVW44, or did I say MNBVW44?”. It did get the first code wrong, though, mishearing a P as a B. That was an intentionally tricky one, and I do not think a human would have done better either. Really good job!

Numbers and Amounts:

- Google (Default Model): Misinterpreted the sentence but got the amount correct “Anita bank and my blunt if you $11,234.56”. Though this result might get you in trouble for other reasons.

- Google (Phone Model): Improved to “interbank fund movement if you $11,234.56”, still getting the amount correct but the sentence not so much.

- Azure: Accurate with “$11,234.56”, but turned “SWIFT code” into “SWIFT quote”.

- OpenAI: Perfectly transcribed the amount and the sentence.

Product Names:

Here the phrase sets come into play, so configurations without one, like the Google default model, will of course have a bad result.

- Google (Default Model): Struggled with names like “Dialogue Studio” and “WebAgent”, transcribing them as “dialog studio” and “whip agency”.

- Google (Phone Model): Improved but still had “dialogue studio” and “webagent” with wrong capitalization of the letters.

- Azure: Accurate with “Dialogue Studio” and “WebAgent”.

- OpenAI: Perfectly transcribed both “Dialogue Studio” and “WebAgent”.

Complex Sentences:

- Google (Default Model): Had issues with sentences like “The insured’s policy code is FGHP456, can you reconfirm the details?”, transcribing it as “the insurance policy called is thp 456 can you reconfirm the details”.

- Google (Phone Model): Improved but still had “the insurance policy code is v t h p 456”.

- Azure: Close with “The insurance policy code is F GH456”.

- OpenAI: Perfectly transcribed as “The insured’s policy code is FGHP456, can you reconfirm the details?”. This is really impressive, as it was the only model that did not split the code into separate letters.

Homophones and Similar Sounds:

Interestingly, none of the models took the bait in a sentence concluding with the word ‘ship’ 🤣.

- Google (Default Model): Struggled with “principal dental coverage”, transcribing it as “reservation for the principal dental coverage”.

- Google (Phone Model): Improved to “valuation for the principal dental coverage”.

- Azure: Transcribed as “evaluation for the principal dental coverage”.

- OpenAI: Perfectly transcribed as “valuation for the principal dental coverage”.

Real-life Importance:

- Google (Default Model): Had significant errors that could lead to misunderstandings in real-life scenarios, such as misinterpreting “SWIFT code” and “IBAN”.

- Google (Phone Model): Improved but still had potential pitfalls, especially with crucial terms.

- Azure: Offered a commendable performance but still had minor issues that could be problematic in high-stakes scenarios.

- OpenAI: Demonstrated high accuracy, making it a reliable choice for real-world applications where precision is paramount.

Final Thoughts

My limited tests indicate that the advertised WER scores of around 5% for Google and Microsoft Azure do not match reality. I suspect that Google and Microsoft might each test on the same datasets they train on, which could produce favorable results.

I also believe that the WER (Word Error Rate) scoring is not the best tool in this context, as it treats all words equally, whereas accurately transcribing my IBAN or SWIFT code is far more crucial than correctly transcribing a preposition.
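One way to complement WER is to check separately whether the critical tokens survived the transcription. The sketch below is only an illustration of that idea, not something I used for the scores in this article; the function name `critical_terms_found` and the choice to match whole alphanumeric tokens are my own assumptions.

```python
import re


def critical_terms_found(hypothesis, critical_terms):
    """Report, per critical term, whether it appears verbatim as one whole token."""
    # Split the transcript into alphanumeric tokens so trailing punctuation is ignored.
    tokens = set(re.findall(r"[A-Za-z0-9]+", hypothesis))
    return {term: term in tokens for term in critical_terms}


# Usage with two transcripts from this test: the policy code must come out as one string.
azure = "The insurance policy code is F GH456"
openai_result = "The insured’s policy code is FGHP456, can you reconfirm the details?"

print(critical_terms_found(azure, ["FGHP456"]))
print(critical_terms_found(openai_result, ["FGHP456"]))
```

A check like this flags a split or misheard code as a failure, even when the rest of the sentence is close enough to keep the WER low.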

It’s essential to note that the accuracy of these engines can vary based on the speaker’s accent and clarity. Native speakers might achieve even better results, but it’s vital to remember that not every user will be a native English speaker, just as I am not.

In real-world scenarios, callers might need to enunciate intricate details, which, as we observed, can be challenging. I’m actually surprised that transcribing individual letters turns out to be so difficult and only OpenAI got it mostly correct. Variations in pronunciation, linguistic nuances, and regional dialect differences (consider German spoken in Austria or Switzerland vs. Germany) introduce even more layers of complexity.

While the scores were revealing, from a broader perspective neither Google’s nor Microsoft’s engine is, on its own, currently ideal for creating a flawless STT (Speech-to-Text) based customer self-service experience. The only one that comes close is the engine from OpenAI; however, it does not support streaming at the moment. I will be monitoring how things develop once it gets included in the Azure OpenAI offering.

My next task is to examine how this can be improved by routing the results through an NLU (Natural Language Understanding) engine that is trained based on the expected context of the conversation. However, I’m still uncertain about how this will help when a ‘P’ is misheard as a ‘B’.

TL;DR OpenAI’s transcription model consistently showcased superior accuracy across various categories. Its ability to handle complex terms, numbers, product names, and intricate sentence structures makes it a standout choice for applications requiring high precision. OpenAI didn’t stutter.

Below you can find my lovely voice in the recordings I used.

Recording 1 with the first sentences on banking and insurance:

I had to shorten that one so that it fits within Azure’s 1-minute free limit.

Recording 2 with the sentences with custom words: